Always back up your WordPress site before making changes to important files, and make copies of any files you are about to modify.

If you don’t want to edit WordPress files yourself, ask an experienced person to help you.

***

WordPress generates a virtual robots.txt file if no physical robots.txt file is found on your server. In this tutorial, you will learn how to create a physical robots.txt file and upload it to your server to override the virtual file, how to edit your robots.txt file, and how to test the instructions it gives to search engines.

Robots File

A robots.txt file tells search engine spiders and other co-operative ‘bots’ which parts of your website you want to keep private, and which areas they can and cannot access. Bots, or ‘robots’, are programs that search engines like Google and other software use to gather information for their databases.

About robots.txt

The following entry is sourced from robotstxt.org:

Web site owners use the /robots.txt file to give instructions about their site to web robots; this is called The Robots Exclusion Protocol.

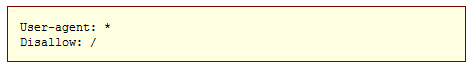

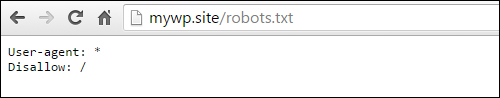

It works like this: a robot wants to visit a website URL, say http://www.yourdomain.com/welcome.php. Before it does so, it first checks for http://www.yourdomain.com/robots.txt, and finds:

User-agent: *
Disallow: /

The “User-agent: *” part contains an asterisk.

As stated in Wikipedia,

In software, a wildcard character is a single character, such as an asterisk (*), used to represent a number of characters or an empty string. It is often used in file searches so the full name need not be typed.

(Source: Wikipedia)

This means that this section applies to all robots.

The “Disallow: /” part tells the robot that it should not visit any pages on the site.
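To see how a compliant crawler applies those two lines, here is a short sketch using Python’s standard `urllib.robotparser` module, which parses the rules from text instead of downloading them. The bot names and URL are placeholders; because the rules sit under `User-agent: *`, every bot gets the same answer.

```python
from urllib.robotparser import RobotFileParser

# The robots.txt content the robot finds in the example above.
ROBOTS_TXT = """\
User-agent: *
Disallow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())  # read the rules from a string, no download

# "User-agent: *" matches every bot, and "Disallow: /" covers every path.
for bot in ("Googlebot", "Bingbot", "AnyBot"):
    allowed = parser.can_fetch(bot, "http://www.yourdomain.com/welcome.php")
    print(f"{bot}: {'allowed' if allowed else 'blocked'}")
```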

There are two important things to consider when using /robots.txt:

- Robots can ignore your /robots.txt instructions. This includes malware robots that scan the web for security vulnerabilities and email address harvesters used by spammers.

- The /robots.txt file is a publicly available file. Anyone can see what sections of your server you don’t want robots to use.

So, don’t use /robots.txt to try and hide information.

If you need to protect content on your WordPress site, see the tutorial below:

Accessing Your Robots File

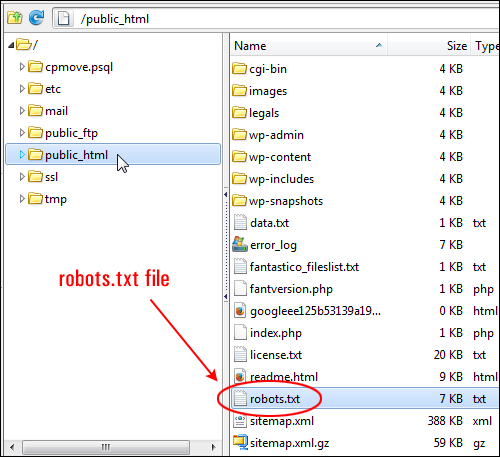

Your robots.txt file must be located in the root directory of your domain, which for a standard installation is the same directory as your WordPress installation …

(Location of robots.txt file)

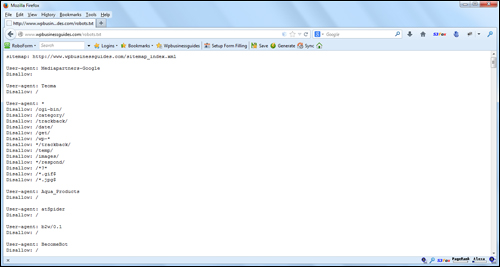

To view your site’s robots.txt file, type your site’s URL into your browser and add “/robots.txt” to the end of the URL, e.g.:

http://www.yoursite.com/robots.txt

This will bring up your robots.txt file …

(robots.txt file)
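Crawlers that follow the Robots Exclusion Protocol only look for robots.txt at the root of the host, whatever page they want to visit, which is why a robots.txt placed only in a subdirectory (such as a WordPress install under `/blog/`) is never requested. The hypothetical `robots_url` helper below sketches that lookup with Python’s `urllib.parse`; the URLs are placeholders.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_url(page_url: str) -> str:
    """Return the robots.txt URL a crawler checks before fetching page_url.

    Only the scheme and network location (host and any port) are kept;
    the path, query and fragment of the page are discarded.
    """
    parts = urlsplit(page_url)
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


print(robots_url("http://www.yoursite.com/welcome.php"))
print(robots_url("http://www.yoursite.com/blog/2020/05/hello-world/?p=1"))
```

Both calls print `http://www.yoursite.com/robots.txt`, because the page’s own path plays no part in where the file is looked up.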

Configuring Your Robots File

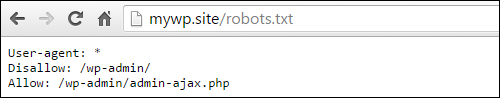

Typically, with a new WordPress installation, if you configure your WordPress Reading Settings to allow search engines to index your site, your robots.txt file will look something like this …

(WordPress Reading Settings – Search Engine Visibility Allowed)

If you check the ‘Discourage search engines from indexing this site’ box under ‘Search Engine Visibility’ in the Reading settings, the instructions in your robots.txt file will be modified to discourage search engines from crawling your site …

(WordPress Reading Settings – Search Engine Visibility Disallowed)
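The exact virtual file depends on your WordPress version and plugins, so treat the rules in the sketch below as an assumption. A common default for a public site is `Disallow: /wp-admin/` followed by `Allow: /wp-admin/admin-ajax.php`, and those two rules overlap. Google documents that the most specific (longest) matching rule wins, with the less restrictive rule winning a tie, whereas Python’s standard `urllib.robotparser` applies the first matching rule in file order and would report `admin-ajax.php` as blocked here. The hypothetical `is_allowed` function sketches the longest-match behavior for a single user-agent group, without wildcard support.

```python
# Minimal sketch of "most specific rule wins" matching (longest path prefix;
# Allow wins a tie). Wildcards such as * and $ inside paths are NOT handled.


def is_allowed(rules: list[tuple[str, str]], path: str) -> bool:
    """rules: (directive, path) pairs from the group that applies to the bot."""
    best_length = -1
    allowed = True  # a path that matches no rule may be crawled
    for directive, rule_path in rules:
        if not rule_path:
            continue  # an empty "Disallow:" value blocks nothing
        if path.startswith(rule_path):
            is_allow = directive.lower() == "allow"
            longer = len(rule_path) > best_length
            tie_goes_to_allow = len(rule_path) == best_length and is_allow
            if longer or tie_goes_to_allow:
                best_length = len(rule_path)
                allowed = is_allow
    return allowed


# Assumed default rules from WordPress's virtual robots.txt (public site).
WP_PUBLIC = [("Disallow", "/wp-admin/"), ("Allow", "/wp-admin/admin-ajax.php")]
# Rules described above for a site that discourages search engines.
WP_DISCOURAGED = [("Disallow", "/")]

print(is_allowed(WP_PUBLIC, "/wp-admin/options.php"))     # False
print(is_allowed(WP_PUBLIC, "/wp-admin/admin-ajax.php"))  # True
print(is_allowed(WP_PUBLIC, "/hello-world/"))             # True
print(is_allowed(WP_DISCOURAGED, "/hello-world/"))        # False
```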

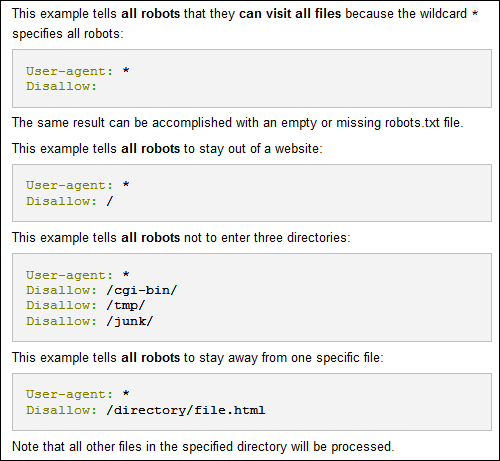

A robots.txt file can be configured in many different ways, depending on what instructions you want to give to search engine spiders and other visiting bots (robots).

Here are just some examples …

(Examples of robots.txt file instructions. Source: Wikipedia)

How To Create A Robots.txt File – Step-By-Step Tutorial

Before creating a robots.txt file, we recommend creating an XML sitemap for your site.

- If you plan to use a WordPress SEO plugin like Yoast SEO (recommended), the plugin will automatically create an XML sitemap for your website and add it to your WordPress installation.

- If you do not plan to use the Yoast SEO plugin, you can use a standalone WordPress XML Sitemap plugin like Google XML Sitemaps to generate an XML sitemap for your site.

Let’s show you how to manually create, configure and upload a robots.txt file to your server that performs a number of important functions …

(robots.txt file)

At the very top of the robots.txt file (i.e. on the first line), add a link to your site’s XML sitemap. The sitemap helps search engines find and index your site’s pages more quickly.

Sitemap: http://www.yoursite.com/sitemap.xml
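You can confirm that a parser picks up the sitemap line with Python’s `urllib.robotparser` (its `site_maps()` method needs Python 3.8 or later). Note that the sitemaps.org protocol treats the `Sitemap` directive as independent of any `User-agent` group, so putting it on the first line is a readability convention rather than a strict requirement. The URL and the `/wp-admin/` rule are placeholders.

```python
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
Sitemap: http://www.yoursite.com/sitemap.xml

User-agent: *
Disallow: /wp-admin/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# site_maps() returns every Sitemap URL found, or None if there are none.
print(parser.site_maps())
```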

Under the sitemap entry, we recommend adding an entry for Google’s ‘Mediapartners-Google’ agent (the AdSense crawler). If you have added Google AdSense to your site, allowing this agent access prevents white space or public service ads from appearing on your pages. These appear when Google has not yet had the opportunity to crawl a page on your site that carries AdSense ads and determine what the page is about.

The entry looks like this:

User-agent: Mediapartners-Google
Disallow:
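An empty `Disallow:` value blocks nothing, so this entry gives the AdSense crawler full access even when another group restricts everyone else. The sketch below checks that with Python’s `urllib.robotparser`; the `/private/` rule and the URL are hypothetical.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical file: AdSense's crawler gets full access, while every other
# bot is kept out of a /private/ folder.
ROBOTS_TXT = """\
User-agent: Mediapartners-Google
Disallow:

User-agent: *
Disallow: /private/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

url = "http://www.yoursite.com/private/page.html"
print(parser.can_fetch("Mediapartners-Google", url))  # True: empty Disallow blocks nothing
print(parser.can_fetch("Googlebot", url))             # False: falls through to the * group
```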

The rest of the text in your file can be organized however you like. In the sample robots.txt file provided below, you’ll see that the file text has been organized into segments or groupings for ease of readability and management.

In our sample robots.txt file, two groupings begin with “User-agent: *”, followed by a list of directories or files, each preceded by “Disallow”. These are the directories and files we don’t want search engines to access.

For example, if you have a folder on your site called “private” that you do not want search robots to crawl, then you would add the following line into your robots.txt file:

Disallow: /private/
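`Disallow` values are matched as path prefixes, so the trailing slash matters. The sketch below uses Python’s `urllib.robotparser` and a hypothetical `blocked_paths` helper to compare `/private/` with `/private` against a few example paths.

```python
from urllib.robotparser import RobotFileParser


def blocked_paths(rule: str, paths: list[str]) -> list[str]:
    """Return the paths a generic bot may NOT fetch under a single Disallow rule."""
    parser = RobotFileParser()
    parser.parse(["User-agent: *", f"Disallow: {rule}"])
    return [p for p in paths if not parser.can_fetch("AnyBot", p)]


paths = ["/private/", "/private/notes.html", "/private", "/private-photos/"]

# With the trailing slash, only the folder and its contents are matched.
print(blocked_paths("/private/", paths))  # ['/private/', '/private/notes.html']
# Without it, anything that merely starts with "/private" is matched too.
print(blocked_paths("/private", paths))   # all four paths
```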

Of particular note are image files. Since searches for some images (e.g. system images) can send your site traffic that brings no benefit, you may want to disallow search engines from accessing these.

What follows these sections in our example robots.txt file is a long list of user-agents that are prevented from accessing the site. These are selected not necessarily because they are ‘bad’ but because they would simply use up your server’s bandwidth and other resources if allowed to access and spider your site. The entries for these items look similar to the example below:

User-agent: ia_archiver
Disallow: /

Having “/” as the “Disallow” value tells that user-agent not to access any file or directory on your site.
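A compliant bot follows only the group that names it, so an `ia_archiver` group with `Disallow: /` shuts out that one crawler while every other bot keeps using the `User-agent: *` rules. The sketch below checks this with Python’s `urllib.robotparser`; the `/wp-admin/` rule and the URL are illustrative assumptions.

```python
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
User-agent: *
Disallow: /wp-admin/

User-agent: ia_archiver
Disallow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

home = "http://www.yoursite.com/"
print(parser.can_fetch("ia_archiver", home))  # False: its own group disallows everything
print(parser.can_fetch("Googlebot", home))    # True: only /wp-admin/ is disallowed for others
```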

Adding A Robots.txt File To Your Site

You can download sample robots.txt files to use for your own site.

If you use the sample robots.txt file, feel free to copy and use it as is, but make sure to make the changes indicated in the file, as described below:

Modify the portions in red (i.e. replace yourdomain.com with your own domain), remove the instructions (including the brackets), then resave the file and re-upload it to your server (see the next section for details on adding a robots.txt file to your server).

Editing Your Robots.txt File

If using the sample robots.txt file provided, either download the zip file provided above or click on the link provided to view the robots.txt file in a new browser window.

Do one of the following:

Option #1

- Click “File” > “Save Page As …”

- Save the page to a location on your hard drive. The file will be saved as “robots.txt”

- Open up the file and change the first line of the file as per the above instructions.

- Resave and upload the robots.txt file via FTP to the root directory of your site.

Option #2

- Select all of the content in the browser window and copy it to your clipboard.

- Create a new plain text file (e.g. in Notepad).

- Paste the contents of your clipboard into the text file.

- Save the file as “robots.txt”

- Open up the file and change the first line of the file as per the above instructions.

- Resave and upload via FTP to the root directory of your site.

After uploading the robots.txt file to your server, verify that it has been uploaded correctly (i.e. open a browser and go to http://www.yoursite.com/robots.txt).

Also, verify the following:

- All the folders you want disallowed have been entered correctly

- “Disallow” is correctly defined. For example, “Disallow: /” means no access at all, while “Disallow:” (with no value after it) means full access.

- You have not left stray spaces or extra characters. Search engines are very particular and may not respond in the way you expect. For example, avoid leaving more than one blank line between groupings. Also, the ‘#’ character has a special meaning in robots.txt (it starts a comment, and everything after it on that line is ignored), so don’t use it in your rules.
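Some of these checks can be automated. The hypothetical `lint_robots` function below is a rough sketch that follows this tutorial’s advice (misspelled field names, stray spaces, `#` characters, repeated blank lines); it is not how any search engine actually parses the file, and the set of recognized field names is an assumption.

```python
# Field names this sketch accepts (an assumption; some crawlers support others).
KNOWN_FIELDS = {"user-agent", "disallow", "allow", "sitemap", "crawl-delay"}


def lint_robots(text: str) -> list[str]:
    """Return warnings for a few common robots.txt mistakes (a rough check only)."""
    warnings = []
    blank_run = 0
    for number, line in enumerate(text.splitlines(), start=1):
        if not line.strip():
            blank_run += 1
            if blank_run == 2:  # report each run of extra blank lines once
                warnings.append(f"line {number}: more than one blank line in a row")
            continue
        blank_run = 0
        if line != line.strip():
            warnings.append(f"line {number}: leading or trailing spaces")
        if "#" in line:
            warnings.append(f"line {number}: '#' starts a comment, so the rest of the line is ignored")
        content = line.split("#", 1)[0].strip()
        if not content:
            continue  # the whole line is a comment
        field, separator, _value = content.partition(":")
        if not separator:
            warnings.append(f"line {number}: missing ':' after the field name")
        elif field.strip().lower() not in KNOWN_FIELDS:
            warnings.append(f"line {number}: unknown field {field.strip()!r}")
    return warnings


# A deliberately broken example: a misspelled "Disallow", two blank lines,
# a leading space and an inline comment.
SAMPLE = "User-agent: *\nDisalow: /private/\n\n\nUser-agent: ia_archiver\n Disallow: / # block all\n"

for warning in lint_robots(SAMPLE):
    print(warning)
```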

Testing Your Robots.txt File

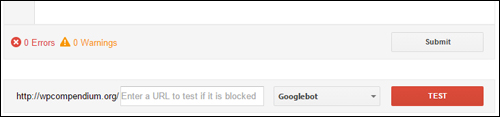

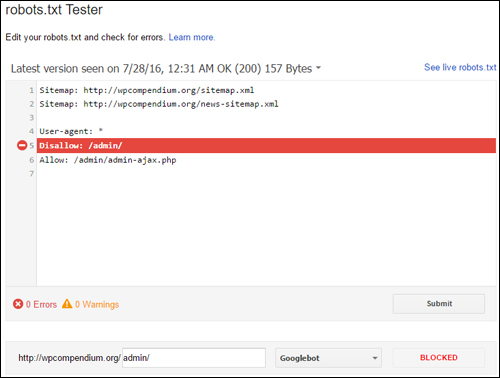

You can test your robots.txt file using a checking tool like the robots.txt Tester provided by Google Search Console (formerly Google Webmaster Tools).

To use the robots.txt file checker tool you will need to have a Google Search Console account set up.

If you haven’t set up your webmaster accounts yet, see the tutorial below:

To test a site’s robots.txt file, do the following:

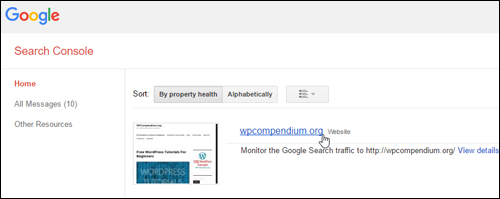

Log into your Google Search Console account …

(Google Search Console Login)

On the Google Search Console home screen, click the site you want to check …

(Google Search Console Home Screen)

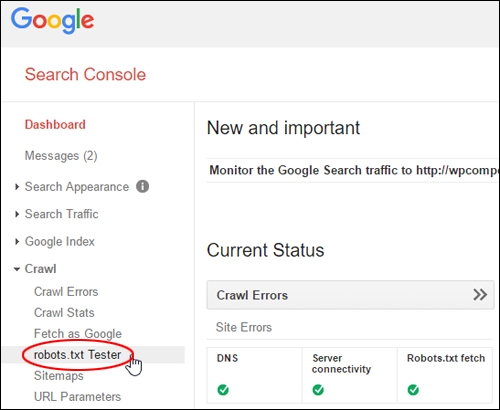

In the Search Console menu, select Crawl > robots.txt Tester …

(Crawl > robots.txt Tester)

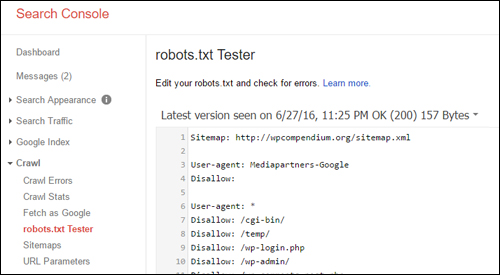

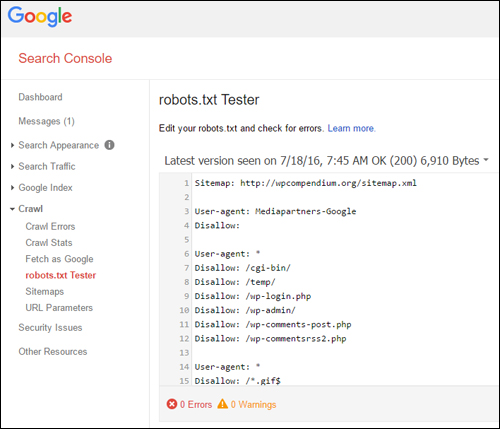

This brings you to the robots.txt Tester screen …

(robots.txt Tester screen)

If your site has been set up correctly and already crawled by Google, the tool will populate the fields on this screen with information about your site.

You can view the content of your robots.txt file and check for errors or warnings …

(Inspect your robots.txt file content)

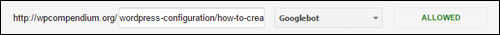

You can also enter a URL into the URL test field and select the user-agent you want to test against from the ‘User-agents’ drop-down menu (e.g. Googlebot, Googlebot mobile, Mediapartners-Google, etc.) …

(Test your URLs to see if any are blocked)

Click the Test button to check the URL against your robots.txt rules and view the result.

The tool will show you if the URL or directory is allowed (i.e. Google can crawl it) …

(Allowed – Google will crawl this URL or directory)

Or if the URL or directory is not allowed …

(Blocked – Google will not crawl this URL or directory)
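For a quick offline check alongside the Google tool, Python’s `urllib.robotparser` can run a similar allowed/blocked test. The hypothetical `check_urls` helper below loops over user-agents and URLs; the rules and URLs are placeholders. Keep in mind that this parser applies the first matching rule in file order, while Google uses the most specific match, so Google’s own tool remains the authority on how Googlebot reads your file.

```python
from urllib.robotparser import RobotFileParser


def check_urls(parser: RobotFileParser, agents: list[str], urls: list[str]) -> dict[tuple[str, str], str]:
    """Return 'Allowed' or 'Blocked' for every (user-agent, URL) pair."""
    return {
        (agent, url): "Allowed" if parser.can_fetch(agent, url) else "Blocked"
        for agent in agents
        for url in urls
    }


ROBOTS_TXT = """\
User-agent: Mediapartners-Google
Disallow:

User-agent: *
Disallow: /wp-admin/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

agents = ["Googlebot", "Mediapartners-Google"]
urls = ["http://www.yoursite.com/", "http://www.yoursite.com/wp-admin/"]
for (agent, url), verdict in check_urls(parser, agents, urls).items():
    print(f"{verdict:8} {agent:21} {url}")
```

To test your live file instead of a string, call `parser.set_url("http://www.yoursite.com/robots.txt")` and then `parser.read()` in place of `parse()`.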

Any changes you make in this tool will not be saved. To save any changes, you’ll need to copy the contents and paste them into your robots.txt file.

Refer to the Google Search Console Help documentation for more details on how to use the tool and how to analyze your results.

Robots.txt – Additional Information

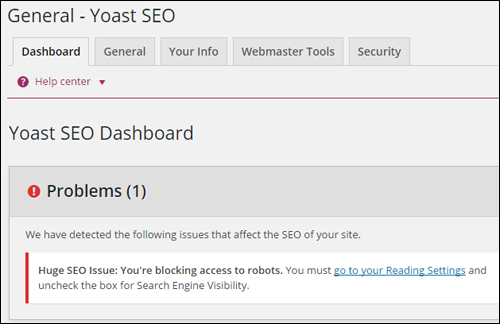

If you have a WordPress SEO Plugin like Yoast SEO installed and discourage search engines from indexing your site (see How To Configure WordPress Reading Settings), you will block access to robots and get an error message like the one shown below …

(Blocking access to robots can affect some SEO plugin settings)

This makes sense … why would you want to optimize your website for search engines if you are instructing WordPress to discourage search engines from visiting your site?

To learn more about configuring WordPress SEO Plugins, see the tutorial below:

To learn more about configuring your WordPress Reading Settings, see the tutorial below:

To learn how to effectively block your WordPress site from being accessed by search engines, see the tutorial below:

For technical information on the benefits and advantages of using a robots.txt file, visit this site:

Congratulations! Now you know how to add a robots.txt file to prevent search engines and compliant bots from crawling pages or sections of your site.

(Source: Pixabay)
